- TCP vs UDP (eng)

In this section we go over the differences between two common transport protocols, TCP and UDP. TCP (Transmission Control Protocol) is the most commonly used protocol. This is largely because, while slower than UDP, TCP is more reliable: it detects lost or corrupted data and retransmits it. This makes it well suited to loading websites and other transfers where all data must arrive intact. UDP (User Datagram Protocol) is lightweight and fast, which makes it a good fit for audio and video streaming and for gaming, for example. So let’s go into a bit more detail and look at each factor that influences transmission speed when using TCP vs UDP.

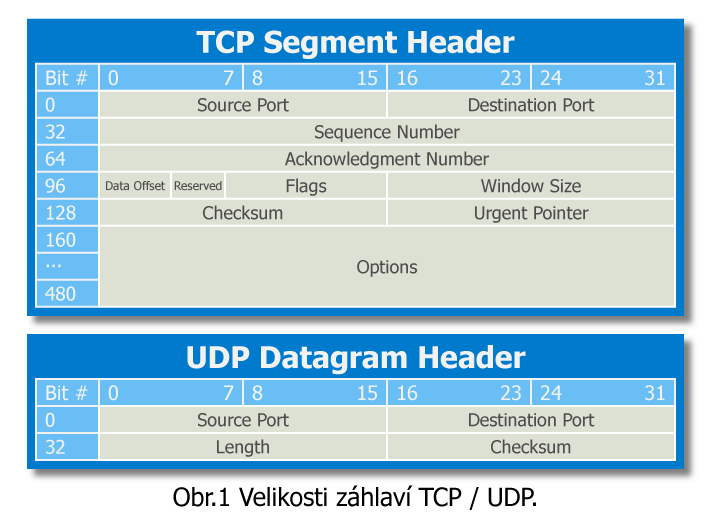

TCP has a lot going on at once, whereas UDP sends out messages without being held back by things such as recovery options. This is reflected in the header size: a TCP header is at least 20 bytes and at most 60 bytes long, while a UDP header is always only 8 bytes (Fig. 1).
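To make the header sizes concrete, here is a minimal Python sketch that lays out the fixed header fields with the standard `struct` module and computes the share of each packet taken up by the header. The helper name `header_overhead` and the payload sizes are illustrative assumptions, not something taken from the measurements in this note.

```python
import struct

# UDP header: source port, destination port, length, checksum (4 x 16 bits).
UDP_HEADER = struct.Struct("!HHHH")

# TCP header without options: source port, destination port, sequence number,
# acknowledgment number, data offset/reserved, flags, window, checksum,
# urgent pointer.
TCP_HEADER_MIN = struct.Struct("!HHIIBBHHH")

# The 4-bit data offset field counts 32-bit words, so the header is at most
# 15 * 4 bytes long once options are included.
TCP_HEADER_MAX = 15 * 4


def header_overhead(payload_bytes: int, header_bytes: int) -> float:
    """Return the fraction of a transport-layer packet used by the header."""
    if payload_bytes < 0 or header_bytes <= 0:
        raise ValueError("payload must be >= 0 and header must be > 0")
    return header_bytes / (header_bytes + payload_bytes)


if __name__ == "__main__":
    print("UDP header bytes:", UDP_HEADER.size)
    print("TCP header bytes:", TCP_HEADER_MIN.size, "to", TCP_HEADER_MAX)
    for payload in (64, 512, 1460):  # hypothetical payload sizes
        udp = header_overhead(payload, UDP_HEADER.size)
        tcp = header_overhead(payload, TCP_HEADER_MIN.size)
        print(f"payload {payload}: UDP {udp:.1%}, TCP {tcp:.1%}")
```

The difference matters most for small payloads; for large payloads the header share shrinks for both protocols.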

You may have noticed that we refer to both TCP and UDP units as “packets”, although the illustration uses the terms “TCP segment” and “UDP datagram”. Those are the precise terms, but “TCP/UDP packet” is nowadays also common in both formal and informal usage. Precise naming is not the point here and does not affect the understanding of the topic, so from here on we also use the term “packet” even when we refer to a TCP segment or a UDP datagram.

One of the principles of UDP is that the sender assumes that all packets sent are received by the other party (or that this kind of control is performed at a different layer, for example by the application itself).

In theory or for some specific protocols (where no control is undertaken at a different layer; e.g., one-way transmissions), the rate at which packets can be sent by the sender is not impacted by the time required to deliver the packets to the other party (= latency). Whatever that time is, the sender will send a given number of packets per second, which depends on other factors (application, operating system, resources, …).

TCP is a more complex protocol, as it includes a mechanism that checks that all packets are correctly delivered. This mechanism is called acknowledgment: the receiver transmits a specific packet or flag to the sender to confirm the proper reception of a packet. In the simplest scenario, this means that the sender will not send another packet until the acknowledgment of the previously sent packet has been received.
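The cost of this simplest scenario is easy to estimate: if only one packet can be in flight per round trip, throughput is bounded by the packet size divided by the round-trip time. The sketch below assumes that the time needed to put the packet on the wire is negligible compared with the round trip; the function name and the example values are hypothetical.

```python
def stop_and_wait_throughput_bps(payload_bytes: int, rtt_seconds: float) -> float:
    """Upper bound on throughput when one packet is sent per round trip.

    Assumption: serialization time is ignored, so each packet costs
    exactly one round-trip time.
    """
    if payload_bytes <= 0 or rtt_seconds <= 0:
        raise ValueError("payload and RTT must be positive")
    return payload_bytes * 8 / rtt_seconds


if __name__ == "__main__":
    # Hypothetical example: 1460-byte payloads over several round-trip times.
    for rtt_ms in (1, 10, 100):
        bps = stop_and_wait_throughput_bps(1460, rtt_ms / 1000)
        print(f"RTT {rtt_ms} ms: {bps / 1e6:.3f} Mbit/s")
```

Under these assumptions, a tenfold increase in round-trip time cuts the achievable throughput tenfold, regardless of the link speed.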

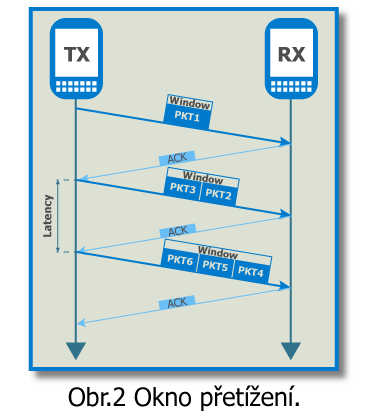

For efficiency purposes, packets are not acknowledged one by one, and the sender does not wait for each acknowledgment before sending a new packet. Instead, the number of packets that may be sent before the corresponding acknowledgment packet is received is governed by a value called the TCP congestion window.

In a lossless environment, the sender sends the first batch of packets (corresponding to the TCP congestion window) and, after receiving the acknowledgment packet, increases the TCP congestion window. The number of packets that can be sent in a given time period thus increases progressively, and the throughput with it (Fig. 2). The delay before acknowledgment packets are received (= latency) affects how fast the TCP congestion window increases.
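The influence of latency on this ramp-up can be illustrated with a small sketch. It assumes that the window doubles once per round trip (a common description of the initial growth phase; actual TCP stacks differ in detail), and the starting and target window sizes are hypothetical.

```python
def ramp_up_rounds(initial_cwnd: int, target_cwnd: int) -> int:
    """Round trips needed for the window to grow from initial to target.

    Assumption: the window (in packets) doubles once per round trip.
    """
    if initial_cwnd < 1 or target_cwnd < initial_cwnd:
        raise ValueError("need 1 <= initial_cwnd <= target_cwnd")
    rounds = 0
    cwnd = initial_cwnd
    while cwnd < target_cwnd:
        cwnd *= 2
        rounds += 1
    return rounds


def ramp_up_time(initial_cwnd: int, target_cwnd: int, rtt_seconds: float) -> float:
    """Time to reach the target window, one growth step per round trip."""
    return ramp_up_rounds(initial_cwnd, target_cwnd) * rtt_seconds


if __name__ == "__main__":
    # Hypothetical example: growing from 10 to 640 packets.
    for rtt_ms in (10, 100):
        seconds = ramp_up_time(10, 640, rtt_ms / 1000)
        print(f"RTT {rtt_ms} ms: {seconds:.2f} s to reach the target window")
```

The number of growth steps is the same in both cases; only the duration of each step, one round trip, changes.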

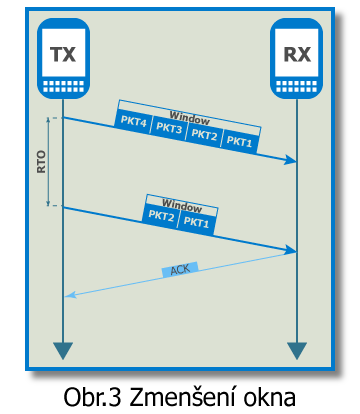

The TCP congestion window mechanism deals with missing acknowledgment packets as follows: if an acknowledgment packet has not arrived after a period of time called the Retransmission Timeout (RTO), the packet is considered lost and the TCP congestion window is reduced, by half in the simplified behaviour shown here (and the throughput with it). The TCP congestion window can then start increasing again, provided that acknowledgment packets are received properly (Fig. 3).
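The resulting sawtooth can be reproduced with a few lines of Python. This is a sketch under stated assumptions: the window is halved in any round trip where a loss is detected, as described above, and otherwise grows by one packet per round trip. Real TCP implementations react in more varied ways (for example, many reduce the window further after a timeout), so the numbers are illustrative only.

```python
def cwnd_trace(rounds: int, loss_rounds: set, start: int = 10, increment: int = 1) -> list:
    """Return the congestion window (in packets) at the start of each round trip.

    Assumptions: halve the window after a round with a detected loss,
    otherwise add `increment` packets per round trip.
    """
    cwnd = start
    trace = []
    for round_index in range(rounds):
        trace.append(cwnd)
        if round_index in loss_rounds:
            cwnd = max(1, cwnd // 2)  # loss: window cut in half
        else:
            cwnd += increment  # all acknowledgments received: grow again
    return trace


if __name__ == "__main__":
    # Hypothetical scenario: a single loss detected in the third round trip.
    print(cwnd_trace(6, {2}))
```

With a start value of 10 and a loss in the third round trip, the trace climbs to 12, drops to 6, and then has to grow back step by step.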

Packet loss will have two effects on the speed of transmission of data:

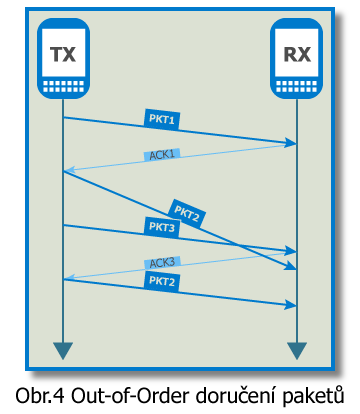

Another notable difference between TCP and UDP is that TCP provides ordered delivery of data from user to server (and vice versa), whereas UDP does not set up a dedicated end-to-end connection, nor does it check the readiness of the receiver. When the receiving side gets TCP data in the wrong order, an out-of-order error occurs.

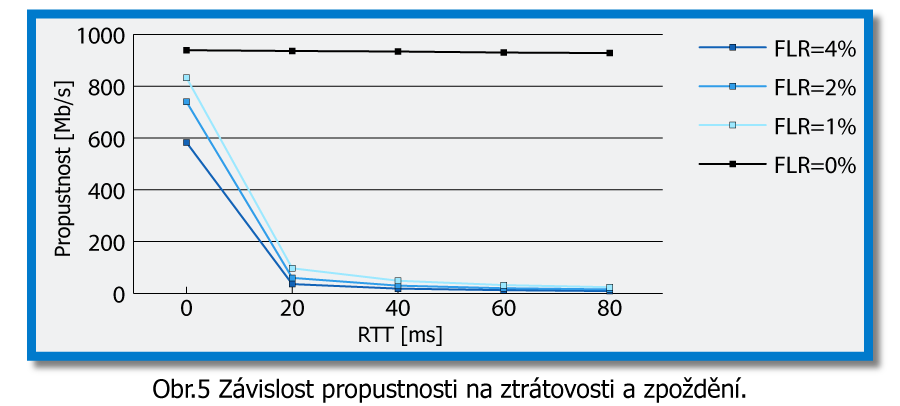
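How a receiver can restore the order is sketched below. This is a simplified illustration, not the actual TCP algorithm: it numbers whole packets (TCP numbers bytes), and the function name and sample data are hypothetical. Packets that arrive early are held in a buffer until the gap before them is filled.

```python
def deliver_in_order(arrivals, first_seq: int = 0) -> list:
    """Return payloads in sequence order, buffering packets that arrive early.

    `arrivals` is an iterable of (sequence_number, payload) pairs in the
    order they were received. Packets behind a gap stay buffered and are
    not delivered.
    """
    expected = first_seq
    buffered = {}
    delivered = []
    for seq, payload in arrivals:
        if seq < expected or seq in buffered:
            continue  # duplicate of something already seen
        buffered[seq] = payload
        # Release every packet that is now contiguous with what was delivered.
        while expected in buffered:
            delivered.append(buffered.pop(expected))
            expected += 1
    return delivered


if __name__ == "__main__":
    # Packet 2 overtakes packet 1 on the way to the receiver.
    print(deliver_in_order([(0, "a"), (2, "c"), (1, "b"), (3, "d")]))
```

While packet 1 is missing, packet 2 cannot be handed to the application, which is one reason why reordering and loss slow TCP down, whereas a UDP application simply receives the packets in arrival order.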

Fig. 5 shows how latency and packet loss influence TCP throughput.

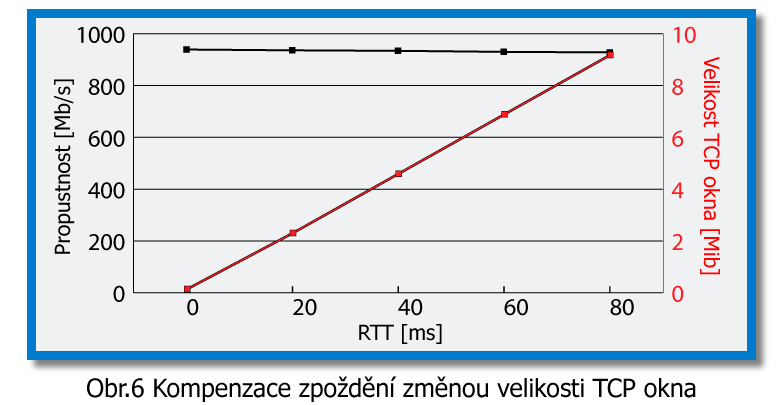

Here FLR = Frame Loss Ratio and RTT = Round-Trip Time. You may have noticed from the graph that throughput is almost unaffected by latency when there are no losses. This may sound counterintuitive, but it does not mean that latency has no effect on TCP throughput; rather, it shows how effective TCP window scaling can be. Let’s have a look at Fig. 6, where both throughput and window size values are combined in one graph.
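Why window scaling matters can be shown with a rough calculation. Since at most one window of data can be in flight per round trip, throughput is bounded by the window size divided by the RTT. Without window scaling, the 16-bit window field of the TCP header limits the window to 65,535 bytes. The sketch below uses hypothetical link speeds and round-trip times, not the values from the tests described here.

```python
MAX_WINDOW_NO_SCALING = 65_535  # bytes, limit of the 16-bit window field


def window_limited_throughput_bps(window_bytes: int, rtt_seconds: float) -> float:
    """Upper bound on throughput when one window is sent per round trip."""
    if window_bytes <= 0 or rtt_seconds <= 0:
        raise ValueError("window and RTT must be positive")
    return window_bytes * 8 / rtt_seconds


def window_needed_bytes(link_bps: float, rtt_seconds: float) -> float:
    """Window needed to keep the link full (bandwidth-delay product)."""
    if link_bps <= 0 or rtt_seconds <= 0:
        raise ValueError("link speed and RTT must be positive")
    return link_bps * rtt_seconds / 8


if __name__ == "__main__":
    for rtt_ms in (1, 10, 100):  # hypothetical round-trip times
        rtt = rtt_ms / 1000
        limit = window_limited_throughput_bps(MAX_WINDOW_NO_SCALING, rtt)
        needed = window_needed_bytes(1e9, rtt)  # hypothetical 1 Gbit/s link
        print(f"RTT {rtt_ms} ms: limit without scaling {limit / 1e6:.1f} Mbit/s, "
              f"window needed for 1 Gbit/s {needed / 1e6:.3f} MB")
```

As long as the window can grow to the bandwidth-delay product, a longer RTT does not have to reduce throughput in the absence of loss.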

Note that the tests were performed in the PROFiber laboratory using two EXFO NetBlazers (connected via Dual Test Set) with an Albedo NetStorm impairment generator between them. Moreover, the delay was set to a fixed value, without any jitter or wander, in order to demonstrate the influence of individual parameters on throughput. The results should therefore not be compared with results from a real network, where some latency fluctuation is usually present.